Table of contents

-

What is Maximum Likelihood Estimation?

1.1. The Main Idea

1.2. Diffusion Process

1.3. Model Architecture

-

2.1. The Main Idea

2.2. Diffusion Process

2.3. Model Architecture

Recall Convolutional Neural Networks: Upsampling, Downsampling, and Deconvolution [1]

Traditional CNNs are used to compress images and extract their features.

1. Upsampling, Downsampling and Dilation

- Upsampling expands the input and downsampling compresses it. Widely used techniques include padding (upsampling), stride (downsampling), and dilation (downsampling).

- With dilation, gaps are inserted between the kernel's elements, pushing the edge elements further away from the center element. This enlarges the receptive field without adding parameters.
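To make these size effects concrete, here is a minimal Python sketch of the standard per-dimension output-size formula for a convolution (the one PyTorch documents for `nn.Conv2d`). The helper name `conv_output_size` is hypothetical, and the sketch ignores channel and batch dimensions.

```python
def conv_output_size(n: int, k: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Output length along one spatial dimension of a convolution (hypothetical helper)."""
    effective_k = dilation * (k - 1) + 1  # span covered by the (possibly dilated) kernel
    return (n + 2 * padding - effective_k) // stride + 1

# 32-pixel input, 3-wide kernel
print(conv_output_size(32, 3))                       # 30: no padding, stride 1
print(conv_output_size(32, 3, padding=1))            # 32: padding enlarges the output
print(conv_output_size(32, 3, stride=2, padding=1))  # 16: stride downsamples
print(conv_output_size(32, 3, dilation=2))           # 28: dilation shrinks it too
```

Compared with the unpadded baseline of 30, padding keeps the output at 32 (a relative upsampling), while stride and dilation both shrink it.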

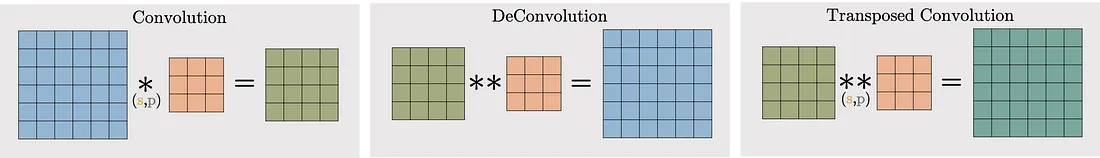

2. Transposed Convolution

- Transposed convolution is upsampling in nature: the layer inserts 0's between (and pads around) the input elements, then runs an ordinary convolution over this modified input. [2]

- Applications: reconstructing images (e.g., the Generator in GANs, decoders, …)

- Difference between Transposed Convolution and Deconvolution:

- Deconvolution reverses a convolution, recovering exactly the original input, whereas Transposed Convolution only restores the input's spatial shape, not its values.
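The transposed-convolution mechanics and the difference from deconvolution can both be sketched in one dimension with plain Python, assuming a single channel, no padding argument, and CNN-style convolution (cross-correlation). `conv1d` and `transposed_conv1d` are hypothetical helpers: the transposed version inserts zeros, pads, and convolves with the flipped kernel, which is equivalent to each input element scattering a scaled copy of the kernel into the output. Running a strided convolution and then its transposed counterpart restores the length but not the values.

```python
def conv1d(x, w, stride=1):
    """Ordinary (valid, unpadded) 1-D convolution, computed as cross-correlation like CNN libraries do."""
    k = len(w)
    return [sum(x[t * stride + j] * w[j] for j in range(k))
            for t in range((len(x) - k) // stride + 1)]

def transposed_conv1d(y, w, stride=1):
    """Transposed convolution: insert zeros, pad, then convolve with the flipped kernel."""
    k = len(w)
    upsampled = []
    for i, yi in enumerate(y):
        if i > 0:
            upsampled.extend([0.0] * (stride - 1))  # (stride - 1) zeros between neighbours
        upsampled.append(yi)
    padded = [0.0] * (k - 1) + upsampled + [0.0] * (k - 1)  # "full" padding on both sides
    return conv1d(padded, w[::-1])

x = [1.0, 2.0, 3.0, 4.0, 5.0]
w = [1.0, 0.5, -1.0]
y = conv1d(x, w, stride=2)                 # downsample: length 5 -> 2
x_rec = transposed_conv1d(y, w, stride=2)  # upsample: length 2 -> 5
print(y)                      # [-1.0, 0.0]
print(x_rec)                  # [-1.0, -0.5, 1.0, 0.0, 0.0]
print(len(x_rec) == len(x))   # True: the shape is restored
print(x_rec == x)             # False: the values are not
```

When `len(x) - k` is not a multiple of the stride, the recovered length comes out shorter than the original; PyTorch's `output_padding` argument on its transposed-convolution layers exists to resolve that ambiguity.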

NOTE: In a Transposed Convolution these effects are reversed: a larger stride (or dilation) enlarges the output, while padding shrinks it.
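The reversal follows from the output-size formula for a transposed convolution, sketched here after the one PyTorch documents for `nn.ConvTranspose2d`. The helper name is hypothetical; `output_padding` only resolves size ambiguity and defaults to 0.

```python
def conv_transpose_output_size(n, k, stride=1, padding=0, output_padding=0, dilation=1):
    """Output length along one dimension of a transposed convolution (hypothetical helper)."""
    return (n - 1) * stride - 2 * padding + dilation * (k - 1) + output_padding + 1

print(conv_transpose_output_size(16, 4))                       # 19
print(conv_transpose_output_size(16, 4, stride=2))             # 34: stride now upsamples
print(conv_transpose_output_size(16, 4, stride=2, padding=1))  # 32: padding now shrinks
```

Stride multiplies the input length and padding is subtracted, which is the mirror image of the ordinary convolution, where stride divides and padding adds.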

Generative Adversarial Networks

Training

- Step 1: The Discriminator tries to maximize $\log D(x) + \log(1 - D(G(z)))$.

- Step 2: The Generator tries to minimize $\log(1 - D(G(z)))$ or, in the commonly used non-saturating variant, maximize $\log D(G(z))$.

- The accompanying notebook implements DCGAN, which uses convolution layers in the Discriminator and transposed convolution layers in the Generator.
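The two training objectives can be evaluated directly. The sketch below, in plain Python with hypothetical helper names, takes lists of discriminator outputs (probabilities in (0, 1)) and also compares the gradients of the two generator terms with respect to $D(G(z))$. When the Discriminator confidently rejects fakes early in training ($D(G(z)) \approx 0$), $\log(1 - D(G(z)))$ saturates while $\log D(G(z))$ still gives a strong signal, which is the usual motivation for the second form.

```python
import math

def discriminator_objective(d_real, d_fake):
    """Batch mean of log D(x) + log(1 - D(G(z))); the Discriminator maximizes this."""
    return sum(math.log(r) + math.log(1.0 - f) for r, f in zip(d_real, d_fake)) / len(d_real)

def generator_objective_minimax(d_fake):
    """Batch mean of log(1 - D(G(z))); the Generator minimizes this (original form)."""
    return sum(math.log(1.0 - f) for f in d_fake) / len(d_fake)

def generator_objective_nonsaturating(d_fake):
    """Batch mean of log D(G(z)); the Generator maximizes this (non-saturating form)."""
    return sum(math.log(f) for f in d_fake) / len(d_fake)

# Derivatives of each generator term with respect to f = D(G(z))
def grad_minimax(f):
    return -1.0 / (1.0 - f)

def grad_nonsaturating(f):
    return 1.0 / f

f = 0.01  # early training: D confidently rejects a fake sample
print(discriminator_objective([0.9], [f]))  # close to 0, its maximum
print(grad_minimax(f))                      # about -1.01: weak learning signal
print(grad_nonsaturating(f))                # about 100: strong learning signal
```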

Notebook with detailed documentation
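As an architecture sketch, the spatial sizes through a DCGAN can be traced with the convolution and transposed-convolution size formulas. The layer settings below, (kernel, stride, padding) = (4, 1, 0) followed by four (4, 2, 1) layers for a 64×64 image, follow the layout of PyTorch's DCGAN tutorial and are an assumption: the notebook's actual layers may differ. All helper names are hypothetical.

```python
def conv_out(n, k, s, p):
    """Ordinary convolution output length (dilation 1)."""
    return (n + 2 * p - k) // s + 1

def conv_transpose_out(n, k, s, p):
    """Transposed convolution output length (dilation 1, output_padding 0)."""
    return (n - 1) * s - 2 * p + k

# (kernel, stride, padding) per layer: assumed 64x64 DCGAN-style layout
GENERATOR = [(4, 1, 0)] + [(4, 2, 1)] * 4      # transposed convolutions
DISCRIMINATOR = [(4, 2, 1)] * 4 + [(4, 1, 0)]  # ordinary convolutions

def trace(size, layers, size_fn):
    """Return the spatial size after each layer, starting from the input size."""
    sizes = [size]
    for k, s, p in layers:
        size = size_fn(size, k, s, p)
        sizes.append(size)
    return sizes

print(trace(1, GENERATOR, conv_transpose_out))  # [1, 4, 8, 16, 32, 64]
print(trace(64, DISCRIMINATOR, conv_out))       # [64, 32, 16, 8, 4, 1]
```

Each stride-2 transposed convolution doubles the size in the Generator, and each stride-2 convolution halves it in the Discriminator, so the two networks mirror each other.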